Data-driven workflows to improve supply chain visibility

Data-driven workflows connect sensor data, partner systems, and analytics to create continuous insight across production, transport, and inventory. By structuring telemetry and automating decision points, organizations can reduce blind spots, support predictive planning and maintenance, and measure energy and sustainability outcomes more consistently.

Modern supply chain visibility depends on structured data flows that turn raw signals into timely decisions. Instead of episodic reports, data-driven workflows consolidate telemetry from devices, operational systems, and partners into repeatable processes. This makes it easier to detect bottlenecks, forecast disruptions, and quantify energy and sustainability impacts. Clear data lineage, consistent tagging, and governed analytics ensure that insights are actionable and traceable across teams and systems.
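As a minimal sketch of what consistent tagging and lineage can look like in practice, the Python below normalizes two differently shaped inputs, a device payload and a partner feed row, into one record type that carries its source tags and processing history. The payload field names (`dev`, `temp_c`, `ts`, `shipment`, `eta_hours`, `iso_time`) and the `TelemetryRecord` schema are hypothetical assumptions for illustration, not a standard format.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class TelemetryRecord:
    """Hypothetical common schema shared by all sources."""
    asset_id: str
    metric: str
    value: float
    unit: str
    timestamp: datetime                         # normalized to UTC
    tags: dict = field(default_factory=dict)    # e.g. source type, site
    lineage: tuple = ()                         # systems and steps the record passed through


def normalize_sensor(raw: dict) -> TelemetryRecord:
    """Map an assumed device payload {'dev', 'temp_c', 'ts' (Unix seconds)}."""
    return TelemetryRecord(
        asset_id=raw["dev"],
        metric="temperature",
        value=float(raw["temp_c"]),
        unit="degC",
        timestamp=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
        tags={"source_type": "iot"},
        lineage=("device:" + raw["dev"], "normalize_sensor"),
    )


def normalize_partner(raw: dict) -> TelemetryRecord:
    """Map an assumed partner row {'shipment', 'eta_hours', 'iso_time' (with UTC offset)}."""
    return TelemetryRecord(
        asset_id=raw["shipment"],
        metric="eta",
        value=float(raw["eta_hours"]),
        unit="h",
        timestamp=datetime.fromisoformat(raw["iso_time"]).astimezone(timezone.utc),
        tags={"source_type": "partner"},
        lineage=("partner_api", "normalize_partner"),
    )


records = [
    normalize_sensor({"dev": "trailer-7", "temp_c": "4.5", "ts": 1714557600}),
    normalize_partner({"shipment": "SHP-1", "eta_hours": 12,
                       "iso_time": "2024-05-01T10:00:00+02:00"}),
]
for r in records:
    print(r.asset_id, r.metric, r.value, r.timestamp.isoformat(), r.lineage)
```

Because both records share one schema and one clock (UTC), downstream analytics can join them without source-specific logic, and the `lineage` field records which system and step produced each value.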

How does predictive analytics improve supply chain visibility?

Predictive analytics uses historical records and streaming inputs to forecast delays, demand shifts, and inventory gaps. Machine learning models ingest telemetry and transactional data to produce risk scores and lead-time estimates, enabling planners to act before issues escalate. When analytics outputs are integrated into workflows, exceptions can trigger automated responses or prioritized human review. Regular model retraining and validation against field telemetry limit the effect of model drift and keep forecasts aligned with changing operational realities.
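The sketch below illustrates the outputs described above (a lead-time estimate, a risk score, and exception routing) under simplifying assumptions: it substitutes plain empirical statistics over historical transit times for a trained machine learning model, and the routing thresholds are arbitrary illustrative values. The function names are hypothetical.

```python
import statistics


def lead_time_estimate(history_days):
    """Median and 90th-percentile lead time from historical transit durations (days)."""
    deciles = statistics.quantiles(history_days, n=10)   # 9 cut points: 10th..90th percentile
    return statistics.median(history_days), deciles[-1]


def delay_risk(history_days, days_until_due):
    """Share of past shipments that took longer than the time remaining (0..1)."""
    late = sum(1 for d in history_days if d > days_until_due)
    return late / len(history_days)


def route(risk, auto_threshold=0.5, review_threshold=0.2):
    """Map a risk score to an action; thresholds are illustrative."""
    if risk >= auto_threshold:
        return "auto_expedite"    # e.g. trigger an automated expedite request
    if risk >= review_threshold:
        return "human_review"     # push to a planner's prioritized queue
    return "no_action"


history = [5, 6, 6, 7, 7, 8, 9, 12, 14, 15]   # past transit times for one lane, in days
median, p90 = lead_time_estimate(history)
risk = delay_risk(history, days_until_due=10)
print(median, p90, risk, route(risk))
```

In this example three of the ten past shipments took longer than the ten days remaining, so the risk score is 0.3 and the shipment goes to human review rather than automated action.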

What role do IoT devices and telemetry play?

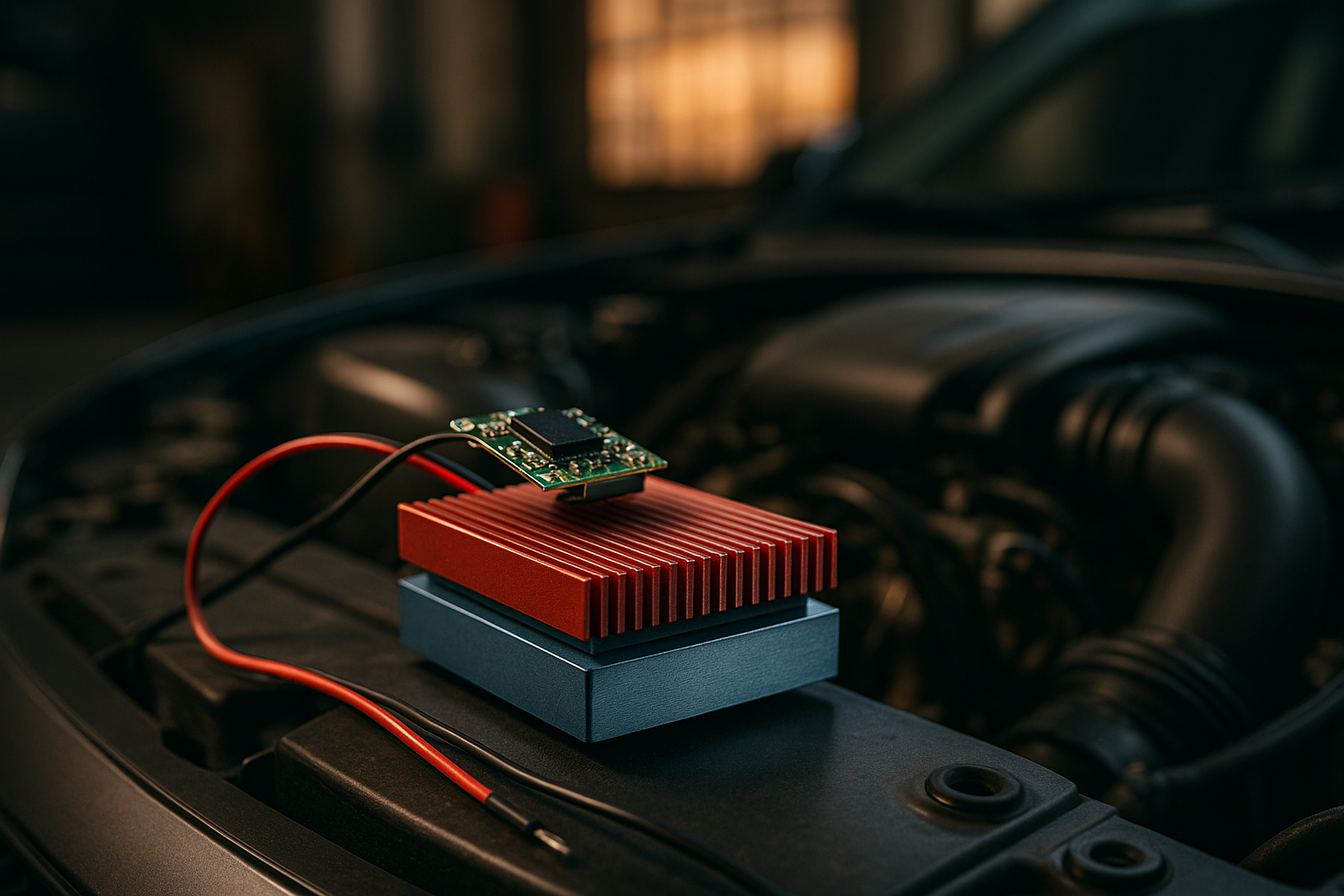

IoT devices provide the granular signals that feed visibility workflows: location, temperature, vibration, and energy consumption are typical examples. Consistent telemetry collection and timestamping enable correlation across transport, warehousing, and production. Edge filtering can reduce bandwidth while preserving critical events; meanwhile centralized storage supports historical analysis and compliance reporting. Proper device management, including firmware updates and authentication, is essential to maintain data quality and trust in downstream analytics.
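Edge filtering is often implemented as report-by-exception: a reading is forwarded only when it differs meaningfully from the last transmitted value, crosses a critical limit, or when a heartbeat interval has elapsed. The Python sketch below assumes a single numeric sensor channel; `EdgeFilter` and its parameters (`deadband`, `critical_high`, `heartbeat_s`) are hypothetical names, and the thresholds in the usage example are illustrative.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EdgeFilter:
    """Report-by-exception filter for one numeric sensor channel (hypothetical)."""
    deadband: float        # minimum change from the last sent value worth transmitting
    critical_high: float   # readings at or above this are always forwarded
    heartbeat_s: float     # forward at least this often, even if nothing changed
    _last_value: Optional[float] = field(default=None, init=False, repr=False)
    _last_sent_ts: Optional[float] = field(default=None, init=False, repr=False)

    def should_forward(self, ts: float, value: float) -> bool:
        first = self._last_value is None
        critical = value >= self.critical_high
        changed = first or abs(value - self._last_value) >= self.deadband
        stale = first or (ts - self._last_sent_ts) >= self.heartbeat_s
        if critical or changed or stale:
            self._last_value = value
            self._last_sent_ts = ts
            return True
        return False   # dropped at the edge; not sent upstream


# Temperature readings every 60 s: (timestamp_s, degC)
readings = [(0, 4.0), (60, 4.1), (120, 4.2), (180, 4.6), (240, 4.6), (300, 8.5), (360, 4.6)]
f = EdgeFilter(deadband=0.5, critical_high=8.0, heartbeat_s=600)
sent = [ts for ts, v in readings if f.should_forward(ts, v)]
print(sent)  # [0, 180, 300, 360]
```

Seven readings reduce to four transmissions, while the 8.5 °C excursion is always forwarded. The heartbeat lets the central system distinguish a quiet sensor from a silent (failed) one.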

Can edge computing and digital twins support operational decisions?

Edge computing enables low-latency processing for local decision logic, such as stopping machinery when vibration exceeds a safe threshold or rerouting shipments in transit. Pairing edge capabilities with a digital twin (a virtual model of assets or processes) allows teams to simulate scenarios and validate control strategies before deploying them to live systems. Digital twin models help teams evaluate retrofitting options, test automation logic, and estimate energy and efficiency improvements in a safe, repeatable environment.
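To illustrate validating edge logic against a simulated model before deployment, the sketch below pairs a debounced vibration interlock with a deliberately simple stand-in for a digital twin: a synthetic sinusoidal signal whose amplitude starts growing at a chosen sample to mimic a developing fault. Real digital twins model far more of the asset's behavior; the trip threshold (4.5, in assumed units of mm/s RMS), window sizes, and function names are illustrative assumptions, not values from any standard.

```python
import math
from collections import deque


def vibration_interlock(samples, threshold, window=5, confirm=3):
    """Return the sample index at which the interlock trips, or None.

    Trips when the moving RMS over `window` samples exceeds `threshold`
    for `confirm` consecutive samples, so a single spike does not stop the machine.
    """
    buf = deque(maxlen=window)
    over = 0
    for i, x in enumerate(samples):
        buf.append(x)
        rms = math.sqrt(sum(v * v for v in buf) / len(buf))
        over = over + 1 if rms > threshold else 0
        if over >= confirm:
            return i   # on a real edge device: de-energize the drive here
    return None


def simulated_trace(n=300, fault_at=150, period=8):
    """Toy stand-in for a digital twin: sinusoidal vibration with 1.0 peak amplitude
    whose amplitude grows linearly from sample `fault_at` to mimic a developing fault."""
    return [
        (1.0 + 0.1 * max(0, i - fault_at)) * math.sin(2 * math.pi * i / period)
        for i in range(n)
    ]


THRESHOLD = 4.5   # illustrative RMS limit, in the trace's assumed units (mm/s)
healthy_trip = vibration_interlock(simulated_trace(fault_at=300), THRESHOLD)
faulty_trip = vibration_interlock(simulated_trace(), THRESHOLD)
print("healthy:", healthy_trip, "faulty:", faulty_trip)
```

Running the interlock on the healthy trace confirms it never trips on normal vibration, and running it on the faulty trace shows when it would stop the machine. Teams can repeat this check whenever the threshold or debounce settings change, before touching live equipment.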

How do automation and retrofitting influence energy and efficiency?

Automation streamlines repetitive tasks such as inventory reconciliation and exception routing, reducing manual errors and cycle times. Retrofitting existing assets with sensors and simple controllers unlocks telemetry without full replacement, improving return on investment and lowering capital barriers to visibility. These measures often lead to measurable energy savings by optimizing runtimes and reducing idling. Successful initiatives balance immediate efficiency gains with maintainability, ensuring controls are documented and serviceable.
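Inventory reconciliation is a typical candidate for this kind of automation. The sketch below compares book quantities with counted (or sensor-derived) quantities per SKU, auto-accepts small variances, and routes larger variances or missing records to human review. The 2% tolerance and the function name `reconcile` are illustrative assumptions.

```python
def reconcile(book_qty, counted_qty, tolerance=0.02):
    """Compare book (system-of-record) quantities with counted quantities per SKU.

    Returns (auto_adjust, exceptions). Variances within `tolerance`, as a fraction
    of the book quantity, are auto-accepted; larger variances and SKUs missing on
    either side are routed to human review.
    """
    auto_adjust, exceptions = {}, {}
    for sku in sorted(set(book_qty) | set(counted_qty)):
        book, count = book_qty.get(sku), counted_qty.get(sku)
        if book is None or count is None:
            exceptions[sku] = "missing_in_system" if book is None else "missing_in_count"
            continue
        variance = count - book
        if variance == 0:
            continue                                    # already in agreement
        if book > 0 and abs(variance) / book <= tolerance:
            auto_adjust[sku] = variance                 # small drift: post adjustment
        else:
            exceptions[sku] = f"variance {variance:+}"  # large drift: needs a person
    return auto_adjust, exceptions


book = {"A": 100, "B": 50, "C": 10}
counted = {"A": 99, "B": 40, "D": 5}
auto, review = reconcile(book, counted)
print(auto)    # {'A': -1}
print(review)  # {'B': 'variance -10', 'C': 'missing_in_count', 'D': 'missing_in_system'}
```

Only the exceptions reach a person, which is where the reduction in manual effort and cycle time comes from; the tolerance controls the trade-off between automation and oversight.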

How does predictive maintenance link to sustainability goals?

Predictive maintenance schedules interventions based on actual equipment condition, lowering unplanned downtime and minimizing spare-parts inventories. Integrating maintenance telemetry with energy monitoring reveals inefficient operating modes and opportunities for tuning or component replacement that reduce consumption and emissions. Workflow-level metrics that combine asset health and energy KPIs help justify investments and track sustainability progress over time. Data governance and cross-functional alignment are required to translate maintenance signals into environmental benefits.
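One way to combine asset health and energy KPIs in a single workflow metric is sketched below. It assumes a health index between 0 and 1 is already produced upstream by a condition or predictive model, and compares each asset's energy intensity (kWh per unit produced) against a per-asset baseline. The thresholds, field names, and ranking rule are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class AssetWindow:
    asset_id: str
    health_index: float   # 0 (failed) .. 1 (as new), assumed to come from an upstream model
    energy_kwh: float     # energy consumed during the reporting window
    units_produced: int   # output during the same window


def energy_intensity(w: AssetWindow) -> float:
    """kWh per unit produced; infinite when nothing was produced."""
    return w.energy_kwh / w.units_produced if w.units_produced else float("inf")


def maintenance_queue(windows, baseline_kwh_per_unit, health_limit=0.6, drift_limit=0.15):
    """Flag assets with low health or energy intensity above baseline by more than drift_limit."""
    flagged = []
    for w in windows:
        base = baseline_kwh_per_unit[w.asset_id]
        drift = (energy_intensity(w) - base) / base   # 0.20 means 20% above baseline
        reasons = []
        if w.health_index < health_limit:
            reasons.append("low_health")
        if drift > drift_limit:
            reasons.append("energy_drift")
        if reasons:
            flagged.append((w.asset_id, round(drift, 3), reasons))
    # Largest energy drift first, so the queue also reflects potential energy savings
    flagged.sort(key=lambda item: item[1], reverse=True)
    return flagged


windows = [
    AssetWindow("pump-1", health_index=0.8, energy_kwh=1200, units_produced=1000),
    AssetWindow("pump-2", health_index=0.5, energy_kwh=900, units_produced=1000),
    AssetWindow("pump-3", health_index=0.9, energy_kwh=1050, units_produced=1000),
]
baseline = {"pump-1": 1.0, "pump-2": 1.0, "pump-3": 1.0}
for item in maintenance_queue(windows, baseline):
    print(item)
```

Sorting by energy drift is one design choice among several; ranking by health index instead would prioritize failure risk over consumption.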

Why are cybersecurity and reskilling critical for visibility projects?

Improved visibility often expands the attack surface by connecting devices, networks, and cloud services. Cybersecurity practices—secure device provisioning, encrypted telemetry, identity and access controls, and patch management—must be embedded into workflows. At the same time, reskilling operators, maintenance staff, and analysts is necessary so teams can interpret analytics, manage automation, and operate digital twin simulations safely. Training programs and role-based procedures reduce operational risk and support steady adoption of new tools and processes.
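As one concrete example of protecting telemetry in transit, the sketch below signs each message with HMAC-SHA256 using a per-device key and rejects messages that fail verification or fall outside a freshness window, which limits (but does not eliminate) replay. This provides integrity and authenticity, not confidentiality; encryption would typically come from a transport layer such as TLS. The message layout, the `device_id`/`ts` fields, and the 300 s window are assumptions for illustration, and keys would normally be provisioned and stored in secure hardware or a key management service rather than in a dict.

```python
import hashlib
import hmac
import json
from typing import Optional


def sign(payload: dict, device_key: bytes) -> dict:
    """Serialize the payload canonically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(device_key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify(message: dict, device_keys: dict, now: float,
           max_age_s: float = 300.0) -> Optional[dict]:
    """Return the payload if the tag is valid and the timestamp is fresh, else None."""
    try:
        body, tag = message["body"], message["tag"]
        payload = json.loads(body)                # parsed only to find the key id
        key = device_keys[payload["device_id"]]   # unknown devices are rejected
        ts = float(payload["ts"])
    except (KeyError, TypeError, ValueError):
        return None
    expected = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, str(tag)):
        return None                               # tampered, or signed with another key
    if abs(now - ts) > max_age_s:
        return None                               # stale or future-dated: possible replay
    return payload


keys = {"sensor-1": b"example-key-do-not-use"}   # placeholder key for the example only
msg = sign({"device_id": "sensor-1", "ts": 1000.0, "temp_c": 4.2}, keys["sensor-1"])
print(verify(msg, keys, now=1010.0))             # payload accepted
tampered = dict(msg, body=msg["body"].replace("4.2", "9.9"))
print(verify(tampered, keys, now=1010.0))        # None
print(verify(msg, keys, now=2000.0))             # None (too old)
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many characters of the tag matched.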

Data-driven workflows are a practical path to clearer supply chain visibility when telemetry quality, analytics rigor, and governance are aligned. Combining IoT, edge processing, digital twin simulation, and automation supports predictive maintenance, energy and efficiency gains, and more resilient operations. Embedding cybersecurity and investing in reskilling help keep these gains sustainable and reduce the chance of introducing new risks. With well-defined workflows, visibility becomes an operational capability rather than an ad hoc reporting exercise.